Vision-language modeling grounds language understanding in corresponding visual inputs, which can be useful for the development of important products and tools. For example, an image captioning model generates natural language descriptions based on its understanding of a given image. While there are various challenges to such cross-modal work, significant progress has been made in the past few years on vision-language modeling thanks to the adoption of effective vision-language pre-training (VLP). This approach aims to learn a single feature space from both visual and language inputs, rather than learning two separate feature spaces, one each for visual inputs and another for language inputs. For this purpose, existing VLP often leverages an object detector, like Faster R-CNN, trained on labeled object detection datasets to isolate regions-of-interest (ROI), and relies on task-specific approaches (i.e., task-specific loss functions) to learn representations of images and texts jointly. Such approaches require annotated datasets or time to design task-specific approaches, and so, are less scalable.

To address this challenge, in “SimVLM: Simple Visual Language Model Pre-training with Weak Supervision”, we propose a minimalist and effective VLP, named SimVLM, which stands for “Simple Visual Language Model”. SimVLM is trained end-to-end with a unified objective, similar to language modeling, on a vast amount of weakly aligned image-text pairs (i.e., the text paired with an image is not necessarily a precise description of the image). The simplicity of SimVLM enables efficient training on such a scaled dataset, which helps the model to achieve state-of-the-art performance across six vision-language benchmarks. Moreover, SimVLM learns a unified multimodal representation that enables strong zero-shot cross-modality transfer without fine-tuning or with fine-tuning only on text data, including for tasks such as open-ended visual question answering, image captioning and multimodal translation.

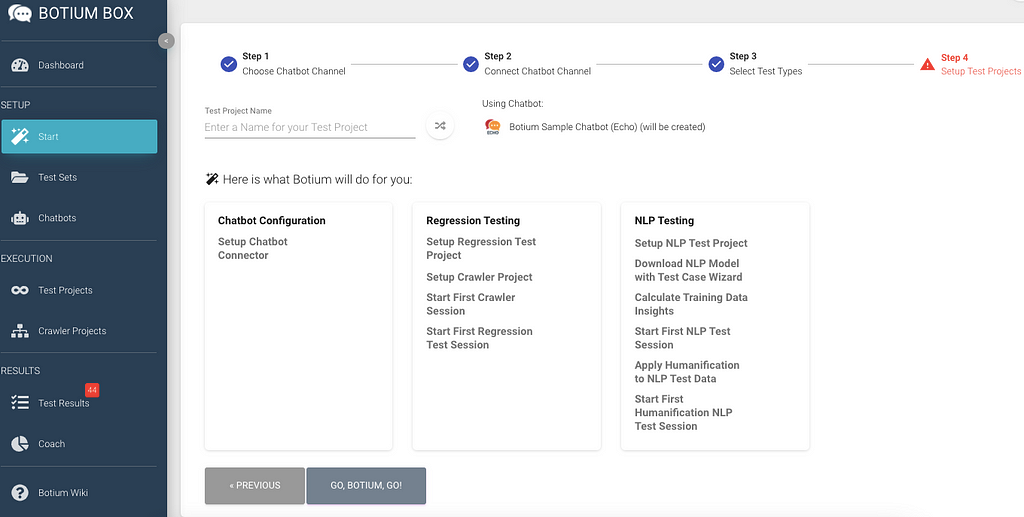

Model and Pre-training Procedure

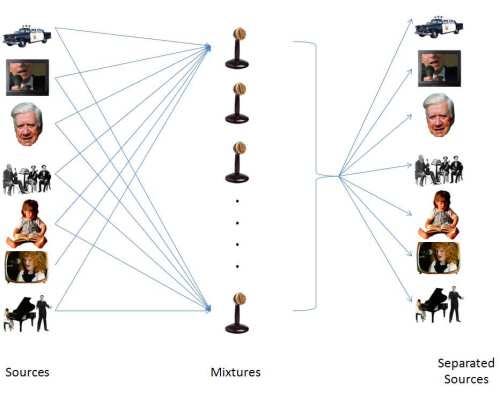

Unlike existing VLP methods that adopt pre-training procedures similar to masked language modeling (like in BERT), SimVLM adopts the sequence-to-sequence framework and is trained with a one prefix language model (PrefixLM) objective, which receives the leading part of a sequence (the prefix) as inputs, then predicts its continuation. For example, given the sequence “A dog is chasing after a yellow ball”, the sequence is randomly truncated to “A dog is chasing” as the prefix, and the model will predict its continuation. The concept of a prefix similarly applies to images, where an image is divided into a number of “patches”, then a subset of those patches are sequentially fed to the model as inputs—this is called an “image patch sequence”. In SimVLM, for multimodal inputs (e.g., images and their captions), the prefix is a concatenation of both the image patch sequence and prefix text sequence, received by the encoder. The decoder then predicts the continuation of the textual sequence. Compared to prior VLP models combining several pre-training losses, the PrefixLM loss is the only training objective and significantly simplifies the training process. This approach for SimVLM maximizes its flexibility and universality in accommodating different task setups.

Finally, due to its success for both language and vision tasks, like BERT and ViT, we adopt the Transformer architecture as the backbone of our model, which, unlike prior ROI-based VLP approaches, enables the model to directly take in raw images as inputs. Moreover, inspired by CoAtNet, we adopt a convolution stage consisting of the first three blocks of ResNet in order to extract contextualized patches, which we find more advantageous than the naïve linear projection in the original ViT model. The overall model architecture is illustrated below.

|

| Overview of the SimVLM model architecture. |

The model is pre-trained on large-scale web datasets for both image-text and text-only inputs. For joint vision and language data, we use the training set of ALIGN which contains about 1.8B noisy image-text pairs. For text-only data, we use the Colossal Clean Crawled Corpus (C4) dataset introduced by T5, totaling 800G web-crawled documents.

Benchmark Results

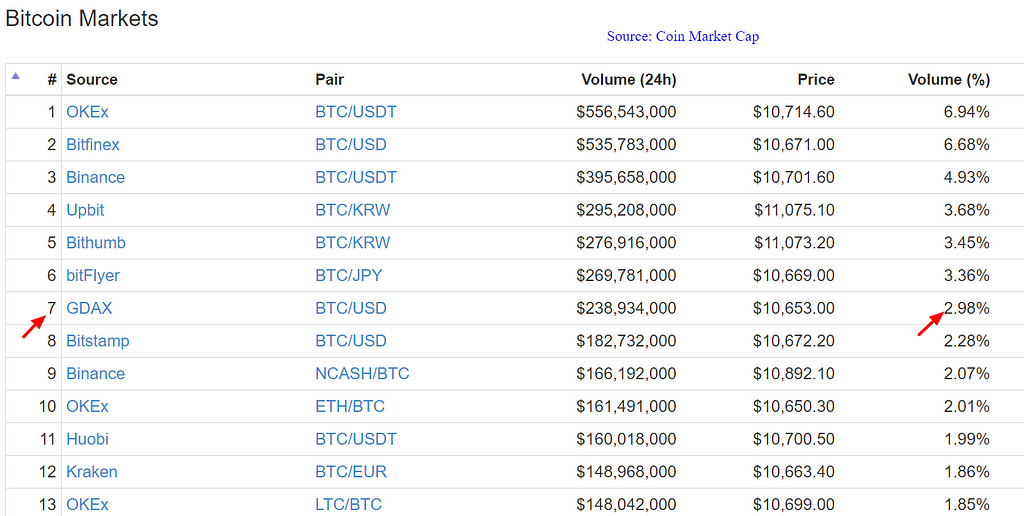

After pre-training, we fine-tune our model on the following multimodal tasks: VQA, NLVR2, SNLI-VE, COCO Caption, NoCaps and Multi30K En-De. For example, for VQA the model takes an image and corresponding questions about the input image, and generates the answer as output. We evaluate SimVLM models of three different sizes (base: 86M parameters, large: 307M and huge: 632M) following the same setup as in ViT. We compare our results with strong existing baselines, including LXMERT, VL-T5, UNITER, OSCAR, Villa, SOHO, UNIMO, VinVL, and find that SimVLM achieves state-of-the-art performance across all these tasks despite being much simpler.

| VQA | NLVR2 | SNLI-VE | CoCo Caption | |||||||||||

| Model | test-dev | test-std | dev | test-P | dev | test | B@4 | M | C | S | ||||

| LXMERT | 72.4 | 72.5 | 74.9 | 74.5 | - | - | - | - | - | - | ||||

| VL-T5 | - | 70.3 | 74.6 | 73.6 | - | - | - | - | 116.5 | - | ||||

| UNITER | 73.8 | 74 | 79.1 | 80 | 79.4 | 79.4 | - | - | - | - | ||||

| OSCAR | 73.6 | 73.8 | 79.1 | 80.4 | - | - | 41.7 | 30.6 | 140 | 24.5 | ||||

| Villa | 74.7 | 74.9 | 79.8 | 81.5 | 80.2 | 80 | - | - | - | - | ||||

| SOHO | 73.3 | 73.5 | 76.4 | 77.3 | 85 | 85 | - | - | - | - | ||||

| UNIMO | 75.1 | 75.3 | - | - | 81.1 | 80.6 | 39.6 | - | 127.7 | - | ||||

| VinVL | 76.6 | 76.6 | 82.7 | 84 | - | - | 41 | 31.1 | 140.9 | 25.2 | ||||

| SimVLM base | 77.9 | 78.1 | 81.7 | 81.8 | 84.2 | 84.2 | 39 | 32.9 | 134.8 | 24 | ||||

| SimVLM large | 79.3 | 79.6 | 84.1 | 84.8 | 85.7 | 85.6 | 40.3 | 33.4 | 142.6 | 24.7 | ||||

| SimVLM huge | 80 | 80.3 | 84.5 | 85.2 | 86.2 | 86.3 | 40.6 | 33.7 | 143.3 | 25.4 | ||||

| Evaluation results on a subset of 6 vision-language benchmarks in comparison with existing baseline models. Metrics used above (higher is better): BLEU-4 (B@4), METEOR (M), CIDEr (C), SPICE (S). Similarly, evaluation on NoCaps and Multi30k En-De also show state-of-the-art performance. |

Zero-Shot Generalization

Since SimVLM has been trained on large amounts of data from both visual and textual modalities, it is interesting to ask whether it is capable of performing zero-shot cross-modality transfer. We examine the model on multiple tasks for this purpose, including image captioning, multilingual captioning, open-ended VQA and visual text completion. We take the pre-trained SimVLM and directly decode it for multimodal inputs with fine-tuning only on text data or without fine-tuning entirely. Some examples are given in the figure below. It can be seen that the model is able to generate not only high-quality image captions, but also German descriptions, achieving cross-lingual and cross-modality transfer at the same time.

|

| Examples of SimVLM zero-shot generalization. (a) Zero-shot image captioning: Given an image together with text prompts, the pre-trained model predicts the content of the image without fine-tuning. (b) zero-shot cross-modality transfer on German image captioning: The model generates captions in German even though it has never been fine-tuned on image captioning data in German. (c) Generative VQA: The model is capable of generating answers outside the candidates of the original VQA dataset. (d) Zero-shot visual text completion: The pre-trained model completes a textual description grounded on the image contents; (e) Zero-shot open-ended VQA: The model provides factual answers to the questions about images, after continued pre-training on the WIT dataset. Images are from NoCaps, which come from the Open Images dataset under the CC BY 2.0 license. |

To quantify SimVLM’s zero-shot performance, we take the pre-trained, frozen model and decode it on the COCO Caption and NoCaps benchmarks, then compare with supervised baselines. Even without supervised fine-tuning (in the middle-rows), SimVLM can reach zero-shot captioning quality close to the quality of supervised methods.

|

| Zero shot image captioning results. Here “Pre.” indicates the model is pre-trained and “Sup.” means the model is finetuned on task-specific supervision. For NoCaps, [In, Near, Out] refer to in-domain, near-domain and out-of-domain respectively. We compare results from BUTD, AoANet, M2 Transformer, OSCAR and VinVL. Metrics used above (higher is better): BLEU-4 (B@4), METEOR (M), CIDEr (C), SPICE (S). For NoCaps, CIDEr numbers are reported. |

Conclusion

We propose a simple yet effective framework for VLP. Unlike prior work using object detection models and task-specific auxiliary losses, our model is trained end-to-end with a single prefix language model objective. On various vision-language benchmarks, this approach not only obtains state-of-the-art performance, but also exhibits intriguing zero-shot behaviors in multimodal understanding tasks.

Acknowledgements

We would like to thank Jiahui Yu, Adams Yu, Zihang Dai, Yulia Tsvetkov for preparation of the SimVLM paper, Hieu Pham, Chao Jia, Andrew Dai, Bowen Zhang, Zhifeng Chen, Ruoming Pang, Douglas Eck, Claire Cui and Yonghui Wu for helpful discussions, Krishna Srinivasan, Samira Daruki, Nan Du and Aashi Jain for help with data preparation, Jonathan Shen, Colin Raffel and Sharan Narang for assistance on experimental settings, and others on the Brain team for support throughout this project.

Source: SimVLM: Simple Visual Language Model Pre-training with Weak Supervision

Date: 15 October 2021, 2:14 pm

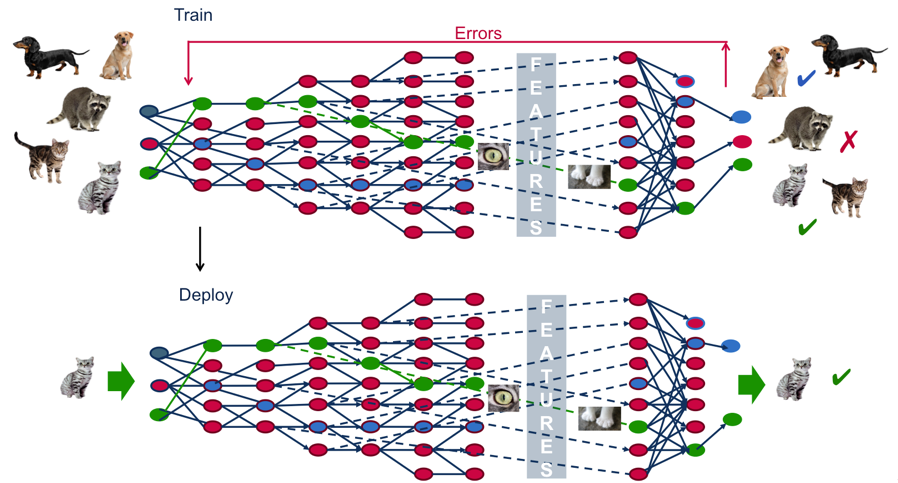

Machine learning (ML) is increasingly being used in real-world applications, so understanding the uncertainty and robustness of a model is necessary to ensure performance in practice. For example, how do models behave when deployed on data that differs from the data on which they were trained? How do models signal when they are likely to make a mistake?

To get a handle on an ML model's behavior, its performance is often measured against a baseline for the task of interest. With each baseline, researchers must try to reproduce results only using descriptions from the corresponding papers , which results in serious challenges for replication. Having access to the code for experiments may be more useful, assuming it is well-documented and maintained. But even this is not enough, because the baselines must be rigorously validated. For example, in retrospective analyses over a collection of works [1, 2, 3], authors often find that a simple well-tuned baseline outperforms more sophisticated methods. In order to truly understand how models perform relative to each other, and enable researchers to measure whether new ideas in fact yield meaningful progress, models of interest must be compared to a common baseline.

In “Uncertainty Baselines: Benchmarks for Uncertainty & Robustness in Deep Learning”, we introduce Uncertainty Baselines, a collection of high-quality implementations of standard and state-of-the-art deep learning methods for a variety of tasks, with the goal of making research on uncertainty and robustness more reproducible. The collection spans 19 methods across nine tasks, each with at least five metrics. Each baseline is a self-contained experiment pipeline with easily reusable and extendable components and with minimal dependencies outside of the framework in which it is written. The included pipelines are implemented in TensorFlow, PyTorch, and Jax. Additionally, the hyperparameters for each baseline have been extensively tuned over numerous iterations so as to provide even stronger results.

Uncertainty Baselines

As of this writing, Uncertainty Baselines provides a total of 83 baselines, comprising 19 methods encompassing standard and more recent strategies over nine datasets. Example methods include BatchEnsemble, Deep Ensembles, Rank-1 Bayesian Neural Nets, Monte Carlo Dropout, and Spectral-normalized Neural Gaussian Processes. It acts as a successor in merging several popular benchmarks in the community: Can You Trust Your Model's Uncertainty?, BDL benchmarks, and Edward2's baselines.

| Dataset | Inputs | Output | Train Examples | Test Datasets |

| CIFAR | RGB images | 10-class distribution | 50,000 | 3 |

| ImageNet | RGB images | 1000-class distribution | 1,281,167 | 6 |

| CLINC Intent Detection | Dialog system query text | 150-class distribution (in 10 domains) | 15,000 | 2 |

| Kaggle's Diabetic Retinopathy Detection | RGB images | Probability of Diabetic Retinopathy | 35,126 | 1 |

| Wikipedia Toxicity | Wikipedia comment text | Probability of toxicity | 159,571 | 3 |

A subset of 5 out of 9 available datasets for which baselines are provided. The datasets span tabular, text, and image modalities.

Uncertainty Baselines sets up each baseline under a choice of base model, training dataset, and a suite of evaluation metrics. Each is then tuned over its hyperparameters to maximize performance on such metrics. The available baselines vary among these three axes:

- Base models (architectures) include Wide ResNet 28-10, ResNet-50, BERT, and simple fully-connected networks.

- Training datasets include standard machine learning datasets (CIFAR, ImageNet, and UCI) as well as more real-world problems (Clinc Intent Detection, Kaggle’s Diabetic Retinopathy Detection, and Wikipedia Toxicity).

- Evaluation includes predictive metrics (e.g., accuracy), uncertainty metrics (e.g., selective prediction and calibration error), compute metrics (inference latency), and performance on in- and out-of-distribution datasets.

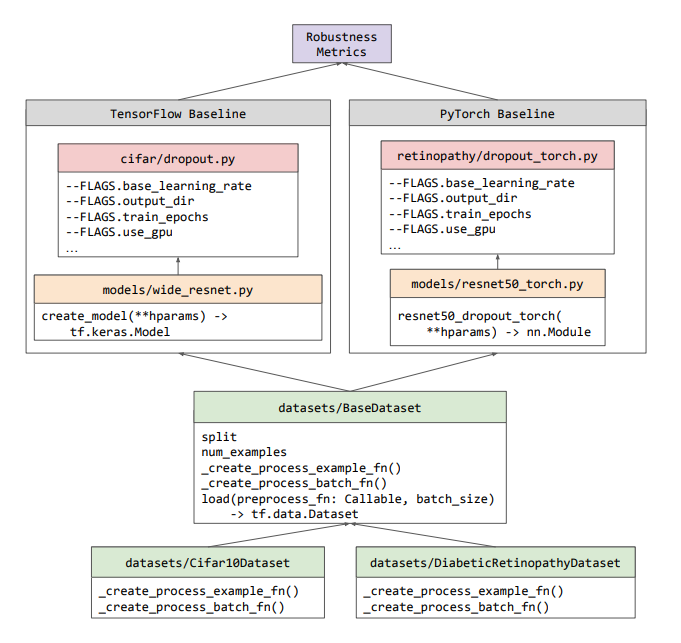

Modularity and Reusability

In order for researchers to use and build on the baselines, we deliberately optimized them to be as modular and minimal as possible. As seen in the workflow figure below, Uncertainty Baselines introduces no new class abstractions, instead reusing classes that pre-exist in the ecosystem (e.g., TensorFlow’s tf.data.Dataset). The train/evaluation pipeline for each of the baselines is contained in a standalone Python file for that experiment, which can run on CPU, GPU, or Google Cloud TPUs. Because of this independence between baselines, we are able to develop baselines in any of TensorFlow, PyTorch or JAX.

|

| Workflow diagram for how the different components of Uncertainty Baselines are structured. All datasets are subclasses of the BaseDataset class, which provides a simple API for use in baselines written with any of the supported frameworks. The outputs from any of the baselines can then be analyzed with the Robustness Metrics library. |

One area of debate among research engineers is how to manage hyperparameters and other experiment configuration values, which can easily number in the dozens. Instead of using one of the many frameworks built for this, and risk users having to learn yet another library, we opted to simply use Python flags, i.e., flags defined using Abseil that follow Python conventions. This should be a familiar technique to most researchers, and is easy to extend and plug into other pipelines.

Reproducibility

In addition to being able to run each of our baselines using the documented commands and get the same reported results, we also aim to release hyperparameter tuning results and final model checkpoints for further reproducibility. Right now we only have these fully open-sourced for the Diabetic Retinopathy baselines, but we will continue to upload more results as we run them. Additionally, we have examples of baselines that are exactly reproducible up to hardware determinism.

Practical Impact

Each of the baselines included in our repository has gone through extensive hyperparameter tuning, and we hope that researchers can readily reuse this effort without the need for expensive retraining or retuning. Additionally, we hope to avoid minor differences in the pipeline implementations affecting baseline comparisons.

Uncertainty Baselines has already been used in numerous research projects. If you are a researcher with other methods or datasets you would like to contribute, please open a GitHub issue to start a discussion!

Acknowledgements

We would like to thank a number of folks who are codevelopers, provided guidance, and/or helped review this post: Neil Band, Mark Collier, Josip Djolonga, Michael W. Dusenberry, Sebastian Farquhar, Angelos Filos, Marton Havasi, Rodolphe Jenatton, Ghassen Jerfel, Jeremiah Liu, Zelda Mariet, Jeremy Nixon, Shreyas Padhy, Jie Ren, Tim G. J. Rudner, Yeming Wen, Florian Wenzel, Kevin Murphy, D. Sculley, Balaji Lakshminarayanan, Jasper Snoek, Yarin Gal.

Over the past 20 months, the COVID-19 pandemic has had a profound impact on daily life, presented logistical challenges for businesses planning for supply and demand, and created difficulties for governments and organizations working to support communities with timely public health responses. While there have been well-studied epidemiology models that can help predict COVID-19 cases and deaths to help with these challenges, this pandemic has generated an unprecedented amount of real-time publicly-available data, which makes it possible to use more advanced machine learning techniques in order to improve results.

In "A prospective evaluation of AI-augmented epidemiology to forecast COVID-19 in the USA and Japan", accepted to npj Digital Medicine, we continued our previous work [1, 2, 3, 4] and proposed a framework designed to simulate the effect of certain policy changes on COVID-19 deaths and cases, such as school closings or a state-of-emergency at a US-state, US-county, and Japan-prefecture level, using only publicly-available data. We conducted a 2-month prospective assessment of our public forecasts, during which our US model tied or outperformed all other 33 models on COVID19 Forecast Hub. We also released a fairness analysis of the performance on protected sub-groups in the US and Japan. Like other Google initiatives to help with COVID-19 [1, 2, 3], we are releasing daily forecasts based on this work to the public for free, on the web [us, ja] and through BigQuery.

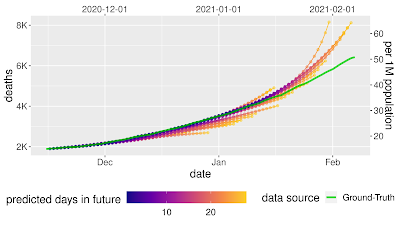

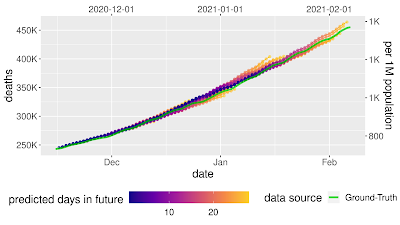

|

The Model

Models for infectious diseases have been studied by epidemiologists for decades. Compartmental models are the most common, as they are simple, interpretable, and can fit different disease phases effectively. In compartmental models, individuals are separated into mutually exclusive groups, or compartments, based on their disease status (such as susceptible, exposed, or recovered), and the rates of change between these compartments are modeled to fit the past data. A population is assigned to compartments representing disease states, with people flowing between states as their disease status changes.

In this work, we propose a few extensions to the Susceptible-Exposed-Infectious-Removed (SEIR) type compartmental model. For example, susceptible people becoming exposed causes the susceptible compartment to decrease and the exposed compartment to increase, with a rate that depends on disease spreading characteristics. Observed data for COVID-19 associated outcomes, such as confirmed cases, hospitalizations and deaths, are used for training of compartmental models.

Our framework proposes a number of novel technical innovations:

- Learned transition rates: Instead of using static rates for transitions between compartments across all locations and times, we use machine-learned rates to map them. This allows us to take advantage of the vast amount of available data with informative signals, such as Google's COVID-19 Community Mobility Reports, healthcare supply, demographics, and econometrics features.

- Explainability: Our framework provides explainability for decision makers, offering insights on disease propagation trends via its compartmental structure, and suggesting which factors may be most important for driving compartmental transitions.

- Expanded compartments: We add hospitalization, ICU, ventilator, and vaccine compartments and demonstrate efficient training despite data sparsity.

- Information sharing across locations: As opposed to fitting to an individual location, we have a single model for all locations in a country (e.g., >3000 US counties) with distinct dynamics and characteristics, and we show the benefit of transferring information across locations.

- Seq2seq modeling: We use a sequence-to-sequence model with a novel partial teacher forcing approach that minimizes amplified growth of errors into the future.

Forecast Accuracy

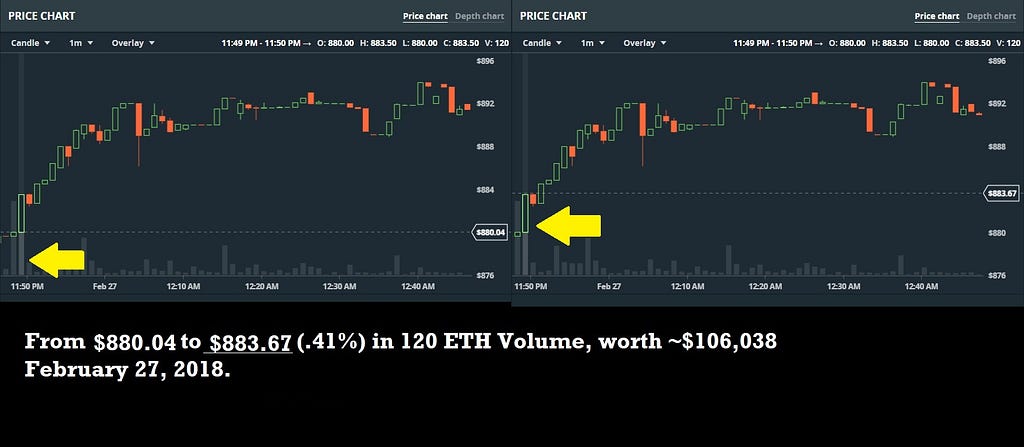

Each day, we train models to predict COVID-19 associated outcomes (primarily deaths and cases) 28 days into the future. We report the mean absolute percentage error (MAPE) for both a country-wide score and a location-level score, with both cumulative values and weekly incremental values for COVID-19 associated outcomes.

We compare our framework with alternatives for the US from the COVID19 Forecast Hub. In MAPE, our models outperform all other 33 models except one — the ensemble forecast that also includes our model’s predictions, where the difference is not statistically significant.

We also used prediction uncertainty to estimate whether a forecast is likely to be accurate. If we reject forecasts that the model considers uncertain, we can improve the accuracy of the forecasts that we do release. This is possible because our model has well-calibrated uncertainty.

|

| Mean average percentage error (MAPE, the lower the better) decreases as we remove uncertain forecasts, increasing accuracy. |

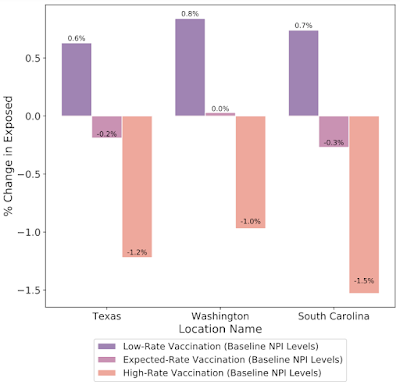

What-If Tool to Simulate Pandemic Management Policies and Strategies

In addition to understanding the most probable scenario given past data, decision makers are interested in how different decisions could affect future outcomes, for example, understanding the impact of school closures, mobility restrictions and different vaccination strategies. Our framework allows counterfactual analysis by replacing the forecasted values for selected variables with their counterfactual counterparts. The results of our simulations reinforce the risk of prematurely relaxing non-pharmaceutical interventions (NPIs) until the rapid disease spreading is reduced. Similarly, the Japan simulations show that maintaining the State of Emergency while having a high vaccination rate greatly reduces infection rates.

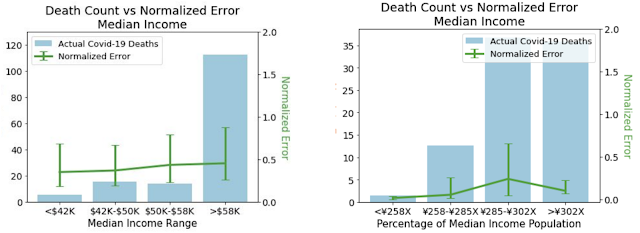

Fairness Analysis

To ensure that our models do not create or reinforce unfairly biased decision making, in alignment with our AI Principles, we performed a fairness analysis separately for forecasts in the US and Japan by quantifying whether the model's accuracy was worse on protected sub-groups. These categories include age, gender, income, and ethnicity in the US, and age, gender, income, and country of origin in Japan. In all cases, we demonstrated no consistent pattern of errors among these groups once we controlled for the number of COVID-19 deaths and cases that occur in each subgroup.

Real-World Use Cases

In addition to quantitative analyses to measure the performance of our models, we conducted a structured survey in the US and Japan to understand how organisations were using our model forecasts. In total, seven organisations responded with the following results on the applicability of the model.

- Organization type: Academia (3), Government (2), Private industry (2)

- Main user job role: Analyst/Scientist (3), Healthcare professional (1), Statistician (2), Managerial (1)

- Location: USA (4), Japan (3)

- Predictions used: Confirmed cases (7), Death (4), Hospitalizations (4), ICU (3), Ventilator (2), Infected (2)

- Model use case: Resource allocation (2), Business planning (2), scenario planning (1), General understanding of COVID spread (1), Confirm existing forecasts (1)

- Frequency of use: Daily (1), Weekly (1), Monthly (1)

- Was the model helpful?: Yes (7)

To share a few examples, in the US, the Harvard Global Health Institute and Brown School of Public Health used the forecasts to help create COVID-19 testing targets that were used by the media to help inform the public. The US Department of Defense used the forecasts to help determine where to allocate resources, and to help take specific events into account. In Japan, the model was used to make business decisions. One large, multi-prefecture company with stores in more than 20 prefectures used the forecasts to better plan their sales forecasting, and to adjust store hours.

Limitations and next steps

Our approach has a few limitations. First, it is limited by available data, and we are only able to release daily forecasts as long as there is reliable, high-quality public data. For instance, public transportation usage could be very useful but that information is not publicly available. Second, there are limitations due to the model capacity of compartmental models as they cannot model very complex dynamics of Covid-19 disease propagation. Third, the distribution of case counts and deaths are very different between the US and Japan. For example, most of Japan's COVID-19 cases and deaths have been concentrated in a few of its 47 prefectures, with the others experiencing low values. This means that our per-prefecture models, which are trained to perform well across all Japanese prefectures, often have to strike a delicate balance between avoiding overfitting to noise while getting supervision from these relatively COVID-19-free prefectures.

We have updated our models to take into account large changes in disease dynamics, such as the increasing number of vaccinations. We are also expanding to new engagements with city governments, hospitals, and private organizations. We hope that our public releases continue to help public and policy-makers address the challenges of the ongoing pandemic, and we hope that our method will be useful to epidemiologists and public health officials in this and future health crises.

Acknowledgements

This paper was the result of hard work from a variety of teams within Google and collaborators around the globe. We'd especially like to thank our paper co-authors from the School of Medicine at Keio University, Graduate School of Public Health at St Luke’s International University, and Graduate School of Medicine at The University of Tokyo.

In recent years, there has been increasing interest in applying deep learning to medical imaging tasks, with exciting progress in various applications like radiology, pathology and dermatology. Despite the interest, it remains challenging to develop medical imaging models, because high-quality labeled data is often scarce due to the time-consuming effort needed to annotate medical images. Given this, transfer learning is a popular paradigm for building medical imaging models. With this approach, a model is first pre-trained using supervised learning on a large labeled dataset (like ImageNet) and then the learned generic representation is fine-tuned on in-domain medical data.

Other more recent approaches that have proven successful in natural image recognition tasks, especially when labeled examples are scarce, use self-supervised contrastive pre-training, followed by supervised fine-tuning (e.g., SimCLR and MoCo). In pre-training with contrastive learning, generic representations are learned by simultaneously maximizing agreement between differently transformed views of the same image and minimizing agreement between transformed views of different images. Despite their successes, these contrastive learning methods have received limited attention in medical image analysis and their efficacy is yet to be explored.

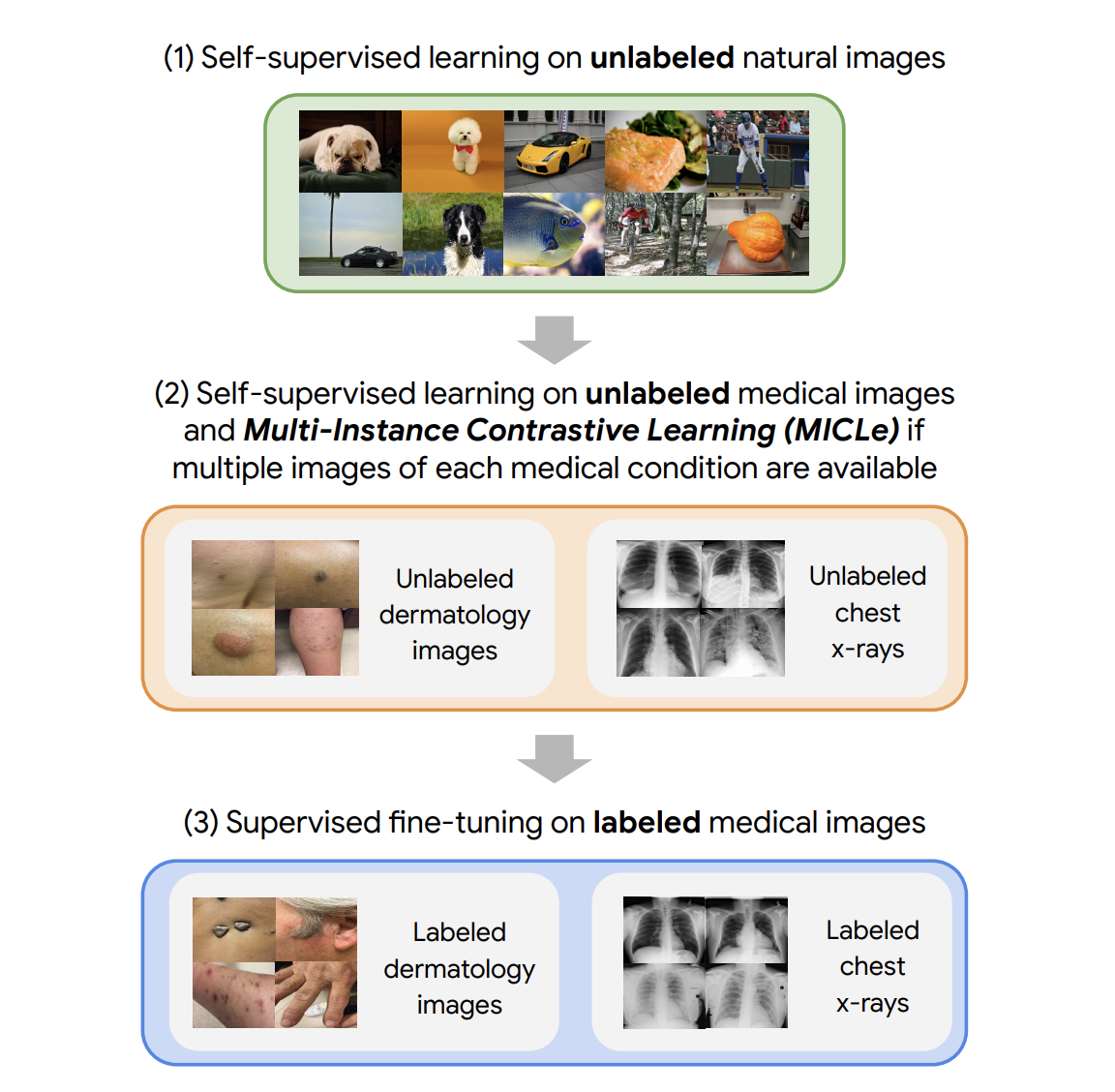

In “Big Self-Supervised Models Advance Medical Image Classification”, to appear at the International Conference on Computer Vision (ICCV 2021), we study the effectiveness of self-supervised contrastive learning as a pre-training strategy within the domain of medical image classification. We also propose Multi-Instance Contrastive Learning (MICLe), a novel approach that generalizes contrastive learning to leverage special characteristics of medical image datasets. We conduct experiments on two distinct medical image classification tasks: dermatology condition classification from digital camera images (27 categories) and multilabel chest X-ray classification (5 categories). We observe that self-supervised learning on ImageNet, followed by additional self-supervised learning on unlabeled domain-specific medical images, significantly improves the accuracy of medical image classifiers. Specifically, we demonstrate that self-supervised pre-training outperforms supervised pre-training, even when the full ImageNet dataset (14M images and 21.8K classes) is used for supervised pre-training.

SimCLR and Multi Instance Contrastive Learning (MICLe)

Our approach consists of three steps: (1) self-supervised pre-training on unlabeled natural images (using SimCLR); (2) further self-supervised pre-training using unlabeled medical data (using either SimCLR or MICLe); followed by (3) task-specific supervised fine-tuning using labeled medical data.

|

| Our approach comprises three steps: (1) Self-supervised pre-training on unlabeled ImageNet using SimCLR (2) Additional self-supervised pre-training using unlabeled medical images. If multiple images of each medical condition are available, a novel Multi-Instance Contrastive Learning (MICLe) strategy is used to construct more informative positive pairs based on different images. (3) Supervised fine-tuning on labeled medical images. Note that unlike step (1), steps (2) and (3) are task and dataset specific. |

After the initial pre-training with SimCLR on unlabeled natural images is complete, we train the model to capture the special characteristics of medical image datasets. This, too, can be done with SimCLR, but this method constructs positive pairs only through augmentation and does not readily leverage patients' meta data for positive pair construction. Alternatively, we use MICLe, which uses multiple images of the underlying pathology for each patient case, when available, to construct more informative positive pairs for self-supervised learning. Such multi-instance data is often available in medical imaging datasets — e.g., frontal and lateral views of mammograms, retinal fundus images from each eye, etc.

Given multiple images of a given patient case, MICLe constructs a positive pair for self-supervised contrastive learning by drawing two crops from two distinct images from the same patient case. Such images may be taken from different viewing angles and show different body parts with the same underlying pathology. This presents a great opportunity for self-supervised learning algorithms to learn representations that are robust to changes of viewpoint, imaging conditions, and other confounding factors in a direct way. MICLe does not require class label information and only relies on different images of an underlying pathology, the type of which may be unknown.

Combining these self-supervised learning strategies, we show that even in a highly competitive production setting we can achieve a sizable gain of 6.7% in top-1 accuracy on dermatology skin condition classification and an improvement of 1.1% in mean AUC on chest X-ray classification, outperforming strong supervised baselines pre-trained on ImageNet (the prevailing protocol for training medical image analysis models). In addition, we show that self-supervised models are robust to distribution shift and can learn efficiently with only a small number of labeled medical images.

Comparison of Supervised and Self-Supervised Pre-training

Despite its simplicity, we observe that pre-training with MICLe consistently improves the performance of dermatology classification over the original method of pre-training with SimCLR under different pre-training dataset and base network architecture choices. Using MICLe for pre-training, translates to (1.18 ± 0.09)% increase in top-1 accuracy for dermatology classification over using SimCLR. The results demonstrate the benefit accrued from utilizing additional metadata or domain knowledge to construct more semantically meaningful augmentations for contrastive pre-training. In addition, our results suggest that wider and deeper models yield greater performance gains, with ResNet-152 (2x width) models often outperforming ResNet-50 (1x width) models or smaller counterparts.

Improved Generalization with Self-Supervised Models

For each task we perform pretraining and fine-tuning using the in-domain unlabeled and labeled data respectively. We also use another dataset obtained in a different clinical setting as a shifted dataset to further evaluate the robustness of our method to out-of-domain data. For the chest X-ray task, we note that self-supervised pre-training with either ImageNet or CheXpert data improves generalization, but stacking them both yields further gains. As expected, we also note that when only using ImageNet for self-supervised pre-training, the model performs worse compared to using only in-domain data for pre-training.

To test the performance under distribution shift, for each task, we held out additional labeled datasets for testing that were collected under different clinical settings. We find that the performance improvement in the distribution-shifted dataset (ChestX-ray14) by using self-supervised pre-training (both using ImageNet and CheXpert data) is more pronounced than the original improvement on the CheXpert dataset. This is a valuable finding, as generalization under distribution shift is of paramount importance to clinical applications. On the dermatology task, we observe similar trends for a separate shifted dataset that was collected in skin cancer clinics and had a higher prevalence of malignant conditions. This demonstrates that the robustness of the self-supervised representations to distribution shifts is consistent across tasks.

Improved Label Efficiency

We further investigate the label-efficiency of the self-supervised models for medical image classification by fine-tuning the models on different fractions of labeled training data. We use label fractions ranging from 10% to 90% for both Derm and CheXpert training datasets and examine how the performance varies using the different available label fractions for the dermatology task. First, we observe that pre-training using self-supervised models can compensate for low label efficiency for medical image classification, and across the sampled label fractions, self-supervised models consistently outperform the supervised baseline. These results also suggest that MICLe yields proportionally higher gains when fine-tuning with fewer labeled examples. In fact, MICLe is able to match baselines using only 20% of the training data for ResNet-50 (4x) and 30% of the training data for ResNet152 (2x).

Conclusion

Supervised pre-training on natural image datasets is commonly used to improve medical image classification. We investigate an alternative strategy based on self-supervised pre-training on unlabeled natural and medical images and find that it can significantly improve upon supervised pre-training, the standard paradigm for training medical image analysis models. This approach can lead to models that are more accurate and label efficient and are robust to distribution shifts. In addition, our proposed Multi-Instance Contrastive Learning method (MICLe) enables the use of additional metadata to create realistic augmentations, yielding further performance boost of image classifiers.

Self-supervised pre-training is much more scalable than supervised pre-training because class label annotation is not required. We hope this paper will help popularize the use of self-supervised approaches in medical image analysis yielding label efficient and robust models suited for clinical deployment at scale in the real world.

Acknowledgements

This work involved collaborative efforts from a multidisciplinary team of researchers, software engineers, clinicians, and cross-functional contributors across Google Health and Google Brain. We thank our co-authors: Basil Mustafa, Fiona Ryan, Zach Beaver, Jan Freyberg, Jon Deaton, Aaron Loh, Alan Karthikesalingam, Simon Kornblith, Ting Chen, Vivek Natarajan, and Mohammad Norouzi. We also thank Yuan Liu from Google Health for valuable feedback and our partners for access to the datasets used in the research.

Source: Self-Supervised Learning Advances Medical Image Classification

Date: 13 October 2021, 12:01 pm

The International Conference on Computer Vision 2021 (ICCV 2021), one of the world's premier conferences on computer vision, starts this week. A Champion Sponsor and leader in computer vision research, Google will have a strong presence at ICCV 2021 with more than 50 research presentations and involvement in the organization of a number of workshops and tutorials.

If you are attending ICCV this year, we hope you’ll check out the work of our researchers who are actively pursuing the latest innovations in computer vision. Learn more about our research being presented in the list below (Google affilitation in bold).

Organizing Committee

Diversity and Inclusion Chair: Negar Rostamzadeh

Area Chairs: Andrea Tagliasacchi, Boqing Gong, Ce Liu, Dilip Krishnan, Jordi Pont-Tuset, Michael Rubinstein, Michael S. Ryoo, Negar Rostamzadeh, Noah Snavely, Rodrigo Benenson, Tsung-Yi Lin, Vittorio Ferrari

Publications

MosaicOS: A Simple and Effective Use of Object-Centric Images for Long-Tailed Object Detection

Cheng Zhang, Tai-Yu Pan, Yandong Li, Hexiang Hu, Dong Xuan, Soravit Changpinyo, Boqing Gong, Wei-Lun Chao

Learning to Resize Images for Computer Vision Tasks

Hossein Talebi, Peyman Milanfar

Joint Representation Learning and Novel Category Discovery on Single- and Multi-Modal Data

Xuhui Jia, Kai Han, Yukun Zhu, Bradley Green

Explaining in Style: Training a GAN to Explain a Classifier in StyleSpace

Oran Lang, Yossi Gandelsman, Michal Yarom, Yoav Wald, Gal Elidan, Avinatan Hassidim, William T. Freeman, Phillip Isola, Amir Globerson, Michal Irani, Inbar Mosseri

Learning Fast Sample Re-weighting without Reward Data

Zizhao Zhang, Tomas Pfister

Contrastive Multimodal Fusion with TupleInfoNCE

Yunze Liu, Qingnan Fan, Shanghang Zhang, Hao Dong, Thomas Funkhouser, Li Yi

Learning Temporal Dynamics from Cycles in Narrated Video

Dave Epstein*, Jiajun Wu, Cordelia Schmid, Chen Sun

Patch Craft: Video Denoising by Deep Modeling and Patch Matching

Gregory Vaksman, Michael Elad, Peyman Milanfar

How to Train Neural Networks for Flare Removal

Yicheng Wu*, Qiurui He, Tianfan Xue, Rahul Garg, Jiawen Chen, Ashok Veeraraghavan, Jonathan T. Barron

Learning to Reduce Defocus Blur by Realistically Modeling Dual-Pixel Data

Abdullah Abuolaim*, Mauricio Delbracio, Damien Kelly, Michael S. Brown, Peyman Milanfar

Hybrid Neural Fusion for Full-Frame Video Stabilization

Yu-Lun Liu, Wei-Sheng Lai, Ming-Hsuan Yang, Yung-Yu Chuang, Jia-Bin Huang

A Dark Flash Normal Camera

Zhihao Xia*, Jason Lawrence, Supreeth Achar

Efficient Large Scale Inlier Voting for Geometric Vision Problems

Dror Aiger, Simon Lynen, Jan Hosang, Bernhard Zeisl

Big Self-Supervised Models Advance Medical Image Classification

Shekoofeh Azizi, Basil Mustafa, Fiona Ryan*, Zachary Beaver, Jan Freyberg, Jonathan Deaton, Aaron Loh, Alan Karthikesalingam, Simon Kornblith, Ting Chen, Vivek Natarajan, Mohammad Norouzi

Physics-Enhanced Machine Learning for Virtual Fluorescence Microscopy

Colin L. Cooke, Fanjie Kong, Amey Chaware, Kevin C. Zhou, Kanghyun Kim, Rong Xu, D. Michael Ando, Samuel J. Yang, Pavan Chandra Konda, Roarke Horstmeyer

Retrieve in Style: Unsupervised Facial Feature Transfer and Retrieval

Min Jin Chong, Wen-Sheng Chu, Abhishek Kumar, David Forsyth

Deep Survival Analysis with Longitudinal X-Rays for COVID-19

Michelle Shu, Richard Strong Bowen, Charles Herrmann, Gengmo Qi, Michele Santacatterina, Ramin Zabih

MUSIQ: Multi-Scale Image Quality Transformer

Junjie Ke, Qifei Wang, Yilin Wang, Peyman Milanfar, Feng Yang

imGHUM: Implicit Generative Models of 3D Human Shape and Articulated Pose

Thiemo Alldieck, Hongyi Xu, Cristian Sminchisescu

Deep Hybrid Self-Prior for Full 3D Mesh Generation

Xingkui Wei, Zhengqing Chen, Yanwei Fu, Zhaopeng Cui, Yinda Zhang

Differentiable Surface Rendering via Non-Differentiable Sampling

Forrester Cole, Kyle Genova, Avneesh Sud, Daniel Vlasic, Zhoutong Zhang

A Lazy Approach to Long-Horizon Gradient-Based Meta-Learning

Muhammad Abdullah Jamal, Liqiang Wang, Boqing Gong

ViViT: A Video Vision Transformer

Anurag Arnab, Mostafa Dehghani, Georg Heigold, Chen Sun, Mario Lučić, Cordelia Schmid

The Surprising Impact of Mask-Head Architecture on Novel Class Segmentation (see the blog post)

Vighnesh Birodkar, Zhichao Lu, Siyang Li, Vivek Rathod, Jonathan Huang

Generalize Then Adapt: Source-Free Domain Adaptive Semantic Segmentation

Jogendra Nath Kundu, Akshay Kulkarni, Amit Singh, Varun Jampani, R. Venkatesh Babu

Unified Graph Structured Models for Video Understanding

Anurag Arnab, Chen Sun, Cordelia Schmid

The Many Faces of Robustness: A Critical Analysis of Out-of-Distribution Generalization

Dan Hendrycks, Steven Basart, Norman Mu, Saurav Kadavath, Frank Wang, Evan Dorundo, Rahul Desai, Tyler Zhu, Samyak Parajuli, Mike Guo, Dawn Song, Jacob Steinhardt, Justin Gilmer

Learning Rare Category Classifiers on a Tight Labeling Budget

Ravi Teja Mullapudi, Fait Poms, William R. Mark, Deva Ramanan, Kayvon Fatahalian

Composable Augmentation Encoding for Video Representation Learning

Chen Sun, Arsha Nagrani, Yonglong Tian, Cordelia Schmid

Multi-Task Self-Training for Learning General Representations

Golnaz Ghiasi, Barret Zoph, Ekin D. Cubuk, Quoc V. Le, Tsung-Yi Lin

With a Little Help From My Friends: Nearest-Neighbor Contrastive Learning of Visual Representations

Debidatta Dwibedi, Yusuf Aytar, Jonathan Tompson, Pierre Sermanet, Andrew Zisserman

Understanding Robustness of Transformers for Image Classification

Srinadh Bhojanapalli, Ayan Chakrabarti, Daniel Glasner, Daliang Li, Thomas Unterthiner, Andreas Veit

Impact of Aliasing on Generalization in Deep Convolutional Networks

Cristina Vasconcelos, Hugo Larochelle, Vincent Dumoulin, Rob Romijnders, Nicolas Le Roux, Ross Goroshin

von Mises-Fisher Loss: An Exploration of Embedding Geometries for Supervised Learning

Tyler R. Scott*, Andrew C. Gallagher, Michael C. Mozer

Contrastive Learning for Label Efficient Semantic Segmentation

Xiangyun Zhao*, Raviteja Vemulapalli, Philip Andrew Mansfield, Boqing Gong, Bradley Green, Lior Shapira, Ying Wu

Interacting Two-Hand 3D Pose and Shape Reconstruction from Single Color Image

Baowen Zhang, Yangang Wang, Xiaoming Deng, Yinda Zhang, Ping Tan, Cuixia Ma, Hongan Wang

Telling the What While Pointing to the Where: Multimodal Queries for Image Retrieval

Soravit Changpinyo, Jordi Pont-Tuset, Vittorio Ferrari, Radu Soricut

SO-Pose: Exploiting Self-Occlusion for Direct 6D Pose Estimation

Yan Di, Fabian Manhardt, Gu Wang, Xiangyang Ji, Nassir Navab, Federico Tombari

Patch2CAD: Patchwise Embedding Learning for In-the-Wild Shape Retrieval from a Single Image

Weicheng Kuo, Anelia Angelova, Tsung-Yi Lin, Angela Dai

NeRD: Neural Reflectance Decomposition From Image Collections

Mark Boss, Raphael Braun, Varun Jampani, Jonathan T. Barron, Ce Liu, Hendrik P.A. Lensch

THUNDR: Transformer-Based 3D Human Reconstruction with Markers

Mihai Zanfir, Andrei Zanfir, Eduard Gabriel Bazavan, William T. Freeman, Rahul Sukthankar, Cristian Sminchisescu

Discovering 3D Parts from Image Collections

Chun-Han Yao, Wei-Chih Hung, Varun Jampani, Ming-Hsuan Yang

Multiresolution Deep Implicit Functions for 3D Shape Representation

Zhang Chen*, Yinda Zhang, Kyle Genova, Sean Fanello, Sofien Bouaziz, Christian Hane, Ruofei Du, Cem Keskin, Thomas Funkhouser, Danhang Tang

AI Choreographer: Music Conditioned 3D Dance Generation With AIST++ (see the blog post)

Ruilong Li*, Shan Yang, David A. Ross, Angjoo Kanazawa

Learning Object-Compositional Neural Radiance Field for Editable Scene Rendering

Bangbang Yang, Han Zhou, Yinda Zhang, Hujun Bao, Yinghao Xu, Guofeng Zhang, Yijin Li, Zhaopeng Cui

VariTex: Variational Neural Face Textures

Marcel C. Buhler, Abhimitra Meka, Gengyan Li, Thabo Beeler, Otmar Hilliges

Pathdreamer: A World Model for Indoor Navigation (see the blog post)

Jing Yu Koh, Honglak Lee, Yinfei Yang, Jason Baldridge, Peter Anderson

4D-Net for Learned Multi-Modal Alignment

AJ Piergiovanni, Vincent Casser, Michael S. Ryoo, Anelia Angelova

Episodic Transformer for Vision-and-Language Navigation

Alexander Pashevich*, Cordelia Schmid, Chen Sun

Graph-to-3D: End-to-End Generation and Manipulation of 3D Scenes Using Scene Graphs

Helisa Dhamo, Fabian Manhardt, Nassir Navab, Federico Tombari

Unconditional Scene Graph Generation

Sarthak Garg, Helisa Dhamo, Azade Farshad, Sabrina Musatian, Nassir Navab, Federico Tombari

Panoptic Narrative Grounding

Cristina González, Nicolás Ayobi, Isabela Hernández, José Hernández, Jordi Pont-Tuset, Pablo Arbeláez

Cross-Camera Convolutional Color Constancy

Mahmoud Afifi*, Jonathan T. Barron, Chloe LeGendre, Yun-Ta Tsai, Francois Bleibel

Defocus Map Estimation and Deblurring from a Single Dual-Pixel Image

Shumian Xin*, Neal Wadhwa, Tianfan Xue, Jonathan T. Barron, Pratul P. Srinivasan, Jiawen Chen, Ioannis Gkioulekas, Rahul Garg

COMISR: Compression-Informed Video Super-Resolution

Yinxiao Li, Pengchong Jin, Feng Yang, Ce Liu, Ming-Hsuan Yang, Peyman Milanfar

Mip-NeRF: A Multiscale Representation for Anti-Aliasing Neural Radiance Fields

Jonathan T. Barron, Ben Mildenhall, Matthew Tancik, Peter Hedman, Ricardo Martin-Brualla, Pratul P. Srinivasan

Nerfies: Deformable Neural Radiance Fields

Keunhong Park*, Utkarsh Sinha, Jonathan T. Barron, Sofien Bouaziz, Dan B Goldman, Steven M. Seitz, Ricardo Martin-Brualla

Baking Neural Radiance Fields for Real-Time View Synthesis

Peter Hedman, Pratul P. Srinivasan, Ben Mildenhall, Jonathan T. Barron, Paul Debevec

Stacked Homography Transformations for Multi-View Pedestrian Detection

Liangchen Song, Jialian Wu, Ming Yang, Qian Zhang, Yuan Li, Junsong Yuan

COTR: Correspondence Transformer for Matching Across Images

Wei Jiang, Eduard Trulls, Jan Hosang, Andrea Tagliasacchi, Kwang Moo Yi

Large Scale Interactive Motion Forecasting for Autonomous Driving: The Waymo Open Motion Dataset

Scott Ettinger, Shuyang Cheng, Benjamin Caine, Chenxi Liu, Hang Zhao, Sabeek Pradhan, Yuning Chai, Ben Sapp, Charles R. Qi, Yin Zhou, Zoey Yang, Aurélien Chouard, Pei Sun, Jiquan Ngiam, Vijay Vasudevan, Alexander McCauley, Jonathon Shlens, Dragomir Anguelov

Low-Shot Validation: Active Importance Sampling for Estimating Classifier Performance on Rare Categories

Fait Poms, Vishnu Sarukkai, Ravi Teja Mullapudi, Nimit S. Sohoni, William R. Mark, Deva Ramanan, Kayvon Fatahalian

Vector Neurons: A General Framework for SO(3)-Equivariant Networks

Congyue Deng, Or Litany, Yueqi Duan, Adrien Poulenard, Andrea Tagliasacchi, Leonidas J. Guibas

SLIDE: Single Image 3D Photography with Soft Layering and Depth-Aware Inpainting

Varun Jampani, Huiwen Chang, Kyle Sargent, Abhishek Kar, Richard Tucker, Michael Krainin, Dominik Kaeser, William T. Freeman, David Salesin, Brian Curless, Ce Liu

DeepPanoContext: Panoramic 3D Scene Understanding with Holistic Scene Context Graph and Relation-Based Optimization

Cheng Zhang, Zhaopeng Cui, Cai Chen, Shuaicheng Liu, Bing Zeng, Hujun Bao, Yinda Zhang

Infinite Nature: Perpetual View Generation of Natural Scenes from a Single Image

Andrew Liu, Richard Tucker, Varun Jampani, Ameesh Makadia, Noah Snavely, Angjoo Kanazawa

Workshops (only Google affiliations are noted)

Visual Inductive Priors for Data-Efficient Deep Learning Workshop

Speakers: Ekin Dogus Cubuk, Chelsea Finn

Instance-Level Recognition Workshop

Organizers: Andre Araujo, Cam Askew, Bingyi Cao, Jack Sim, Tobias Weyand

Unsup3D: Unsupervised 3D Learning in the Wild

Speakers: Adel Ahmadyan, Noah Snavely, Tali Dekel

Embedded and Real-World Computer Vision in Autonomous Driving (ERCVAD 2021)

Speakers: Mingxing Tan

Adversarial Robustness in the Real World

Speakers: Nicholas Carlini

Neural Architectures: Past, Present and Future

Speakers: Been Kim, Hanxiao Liu Organizers: Azade Nazi, Mingxing Tan, Quoc V. Le

Computational Challenges in Digital Pathology

Organizers: Craig Mermel, Po-Hsuan Cameron Chen

Interactive Labeling and Data Augmentation for Vision

Speakers: Vittorio Ferrari

Map-Based Localization for Autonomous Driving

Speakers: Simon Lynen

DeeperAction: Challenge and Workshop on Localized and Detailed Understanding of Human Actions in Videos

Speakers: Chen Sun Advisors: Rahul Sukthankar

Differentiable 3D Vision and Graphics

Speakers: Angjoo Kanazawa

Deep Multi-Task Learning in Computer Vision

Speakers: Chelsea Finn

Computer Vision for AR/VR

Speakers: Matthias Grundmann, Ira Kemelmacher-Shlizerman

GigaVision: When Gigapixel Videography Meets Computer Vision

Organizers: Feng Yang

Human Interaction for Robotic Navigation

Speakers: Peter Anderson

Advances in Image Manipulation Workshop and Challenges

Organizers: Ming-Hsuan Yang

More Exploration, Less Exploitation (MELEX)

Speakers: Angjoo Kanazawa

Structural and Compositional Learning on 3D Data

Speakers: Thomas Funkhouser, Kyle Genova Organizers: Fei Xia

Simulation Technology for Embodied AI

Organizers: Li Yi

Video Scene Parsing in the Wild Challenge Workshop

Speakers: Liang-Chieh (Jay) Chen

Structured Representations for Video Understanding

Organizers: Cordelia Schmid

Closing the Loop Between Vision and Language

Speakers: Cordelia Schmid

Segmenting and Tracking Every Point and Pixel: 6th Workshop on Benchmarking Multi-Target Tracking

Organizers: Jun Xie, Liang-Chieh Chen

AI for Creative Video Editing and Understanding

Speakers: Angjoo Kanazawa, Irfan Essa

BEHAVIOR: Benchmark for Everyday Household Activities in Virtual, Interactive, and Ecological Environments

Speakers: Chelsea Finn Organizers: Fei Xia

Computer Vision for Automated Medical Diagnosis

Organizers: Maithra Raghu

Computer Vision for the Factory Floor

Speakers: Cordelia Schmid

Tutorials (only Google affiliations are noted)

Towards Robust, Trustworthy, and Explainable Computer Vision

Speakers: Sara Hooker

Multi-Modality Learning from Videos and Beyond

Organizers: Arsha Nagrani

Tutorial on Large Scale Holistic Video Understanding

Organizers: David Ross

Efficient Video Understanding: State of the Art, Challenges, and Opportunities

Organizers: Arsha Nagrani

* Indicates work done while at Google

SkyNet isn’t coming for you — but excel might be

A few years ago, I was working on an artificial intelligence startup called AlphaMorph. We worked on a kind of AI known as genetic algorithms (think evolution, not brains) and were using the wonderful little game Airships: Conquer the Skies as a testing environment. The goal of the game was to design digital airships and command them to victory. Each ship was made of dozens of components (cannons, sails, balloons) which the player could link up.

In one of our trials, we gave the algorithm a simple instruction: defeat as many enemy vessels as possible (in 1v1 rounds) with the cheapest possible airship. After generations of trial and error, and days of computer time, AlphaMorph produced its answer: a tiny ship which would fly above enemies, attach itself via harpoon, and fire missiles point blank into their hull.

This strategy was devastatingly effective — but it was also suicidal.

In nearly every trial, the AI-produced ship would destroy itself only seconds after the enemy fell. To our human eyes, this appears to be a failure. Afterall, in both the game and real life, a “victory” which requires self-destruction is hardly a victory at all. But to the AI, this solution was ideal. Its instructions were to destroy as many ships as possible as cheaply as possible. It did that — and only that.

Self-survivability was not included in the instructions, and so it was ignored.

In the world of AI, the definition of “intelligence” is fairly controversial. To most people, intelligence means adaptability, some degree of self-awareness, and the ability to carry prior knowledge into new situations. If applied to AI, however, barely any (if any at all) machine intelligences would meet those requirements.

From an enemy in a video game, to an excel model, to a genetic algorithm, the vast majority of “AIs” in use today are what could be called “dumb.” Most every video game AI simply runs down a checklist of “if-then” reactions to the player, most excel algorithms are little more than equations calculated in series, and genetic algorithms are no more intelligent than Darwinian evolution. In short, most AIs cannot adapt, they cannot change their program, and they cannot truly learn or remember; it’s certainly artificial, but it’s not particularly intelligent after all.

But that doesn’t mean “dumb AI” is weak — or safe.

Trending AI Articles:

1. Why Corporate AI projects fail?

2. How AI Will Power the Next Wave of Healthcare Innovation?

3. Machine Learning by Using Regression Model

4. Top Data Science Platforms in 2021 Other than Kaggle

Every day, across the world, millions of decisions are outsourced to algorithms (one kind of “dumb AI” we discussed above). Excel spreadsheets run numbers and tell analysts how an advertisement, service, downturn, or market shift impacted business. And, because nearly every institution we have is dedicated to generating profit, most of these dumb AIs spend their days telling human beings what is or is not profitable.

Moral considerations never enter these models. Algorithms, like all dumb AI, are only able to play by the rules we write and strive for the goals we set. If a corporation uses algorithms to increase profits, they will do just that — no matter the cost.

This is dangerous for two reasons. For one, it means giving immense power to undemocratic, unintelligent, and truly amoral machines. For another, it absolves individual humans of having to face moral choices. A rideshare company’s pricing algorithm can determine, rightly or wrongly, that a surge in requests for rides is an opportunity for profit, and increase the cost as a result. Done without direct human oversight, this allows companies like Uber to extract as much profit as possible from, say, a concert which has recently ended and generated a large pool of demand in one area. No person is asked to justify the increases, and if they were, they could just point to the math and shrug.

In most situations, exporting such a decision to an algorithm (or any form of dumb AI) is mostly just annoying. Sure it’s no fun to pay more to leave, but it’s hardly life and death. Except, one day, it was.

After a group of knife-wielding terrorists drove into crowds on London Bridge, Uber’s algorithm noticed an opportunity. Hundreds of requests came surging in for rides away from the danger and its aftermath, and the algorithm dutifully increased rates as a result. What had been an annoyance in one situation (likely the situation Uber’s developers had considered when programming this AI) became a robbery in another — your money or your life.

After this incident, there was a massive and understandable backlash against Uber. It’s not clear whether their surge pricing algorithm was changed in any way, but at least the harm it caused was noticed, highlighted, and organized against. But this is an extreme example of harm done by dumb AIs. The vast majority of similar decisions, each with the capacity to do damage and each with little direct human oversight, are never acknowledged by their victims or perpetrators.

What about a salary sheet that recommends who gets raises? A productivity algorithm that leaves a warehouse understaffed? A supply chain monitor that proposes leveling another acre for palm oil? Who objects to, or even notices, the AIs making those calls?

Millions of little choices, all made by dispassionate, unintelligent, and unbiased machines completing their instructions perfectly every day. Each one is told to maximize shareholder profit and each does so immaculately — until one day we are left with an uninhabitable planet, replete with suffering, and containing whatever remains of the servers housing those imagined profits.

The idea of a human-like intelligence deciding humanity should be destroyed is romantic. It’s roughly akin to a malevolent god deciding to punish humanity for our sins.

The more probable horror, however, is far less story-esque. Dumb algorithms — those which may adapt but ultimately seek to achieve very simply instructions — are far more likely to be our downfall. The instruction “generate profit” or “create paperclips,” if given without sufficient limitations (limitations humans may not be able to predict, much less implement) could result in any number of horrors all wrought in pursuit of the simplest goals. Human lives, a habitable climate, or concern for ecologies do not matter to algorithms by default. They have to be coded in, either as an instruction or as a rule — and if we aren’t comprehensive enough, they may well be ignored.

AlphaMorph was not evil or even wrong, it was misled. I misled it. By providing incomplete instructions, I left the algorithm with an opportunity to find “solutions” no human would accept.

Similarly, it’s not that excel, or evolution, or programming, or math is evil, it’s that we do not understand the power we’ve given it, the holes in our instructions, or the limitations of our code. Any human future will need some kind of dumb AI assistance. Whether post-apocalyptic or hyper-futuristic, dumb AIs are excellent at completing repeated tasks or helping humans find optimal solutions. But they are a tool, not a cure-all.

Intelligence has the capacity for fundamental re-evaluation and change. Algorithms do not. An intelligence might be wrong, or even evil, but “dumb algorithms” are something much more dangerous.

They are inevitable.

If given the power, they will simply and unthinkingly execute their instructions; if they execute us in the process, they won’t even notice.

Special thanks to Phasma Landrum for suggesting this topic.

Don’t forget to give us your 👏 !

The Dangers of Dumb AI was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

In the future, advanced cybersecurity solutions will be created by combining artificial intelligence with human intelligence. Artificial intelligence will make it easier to combat cybercrime and cyberattacks. AI has a lot of potential in the transportation and manufacturing industries.

Artificial intelligence will spur innovation and have a big impact across a variety of industries.

Artificial intelligence has had a big impact on a range of industries in recent years and will continue to do so in the future. As a consequence of the pandemic-induced acceleration of technology adoption, many businesses, both private and public, are utilising AI for their advantage and growth. In recent years, AI has enabled many advances and accelerated the spread of technologies such as IoT, robotics, analytics, and voice assistants.

Artificial Intelligence’s Impact

Artificial intelligence will make it easier to combat cybercrime and cyberattacks. AI has a lot of potential in the transportation and manufacturing industries. In the coming years, we may witness optimal advancement and commercialization of smart and autonomous vehicles. Self-driving cars are currently on the market, but in the next two to three decades, more people will use them. AI will also help the manufacturing business.

Thanks to artificial intelligence, healthcare systems will be able to track and monitor patients in real-time, as well as get genetic data and learn about each person’s lifestyle. Algorithms will be in charge of detecting diseases and making relevant recommendations.

Is Artificial Intelligence (AI) Going to Destroy Human Labor?

This is a concern that has hovered over artificial intelligence for a long time. Elon Musk and Stephen Hawkings, among other experts and industrial giants, have warned mankind about the technology’s bad consequences and hazards. One of the most often claimed consequences is that AI will replace human labour, resulting in major job losses. This claim is grossly overstated, according to experts, and while AI may replace humans in some occupations, it will not replace the whole workforce. Picking and packing products, sorting and separating commodities, responding to recurring client inquiries, and other regular activities and repetitive operations are all part of the job.

Trending AI Articles:

1. Why Corporate AI projects fail?

2. How AI Will Power the Next Wave of Healthcare Innovation?

3. Machine Learning by Using Regression Model

4. Top Data Science Platforms in 2021 Other than Kaggle

Should We Be Worried About AI’s Future?

AMOLF’s Soft Robotic Matter section has published research demonstrating how self-learning robots can quickly adapt to changing circumstances. These little robotic components were connected together in order for them to learn to move independently.

As a result, AI may not be a threat to human life as a whole. However, there is a possibility that someone will make use of the technology’s capabilities to harm others. Combat robots, for example, might be used to deliver harmful intents via their algorithms. As a result, it will be critical in the next years to establish an ethical AI environment devoid of human prejudices, which may aid in development.

Don’t forget to give us your 👏 !

What role will artificial intelligence play in improving the future? was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Source: What role will artificial intelligence play in improving the future?

Date: 15 October 2021, 9:56 am

Shoppers have been known to abandon their shopping carts seeing the long queues at the counters. In spite of the amazing strides the retail industry has seen, what customers want is freedom from the endless lines at the checkout counters for a seamless shopping experience.

Checkout-free technology has brought a new experience to the retail environment. The automated system recognizes products and bills the customer accordingly. This means less waiting time for the shopper and a quicker service as compared to conventional shopping checkout lanes.

Using AI-powered cameras and software, computer vision is changing the way people interact with the physical world. Computer vision and AI are ultimately going to have an impact that goes far beyond retail autonomous driving, manufacturing, offices, gyms to fundamentally alter and better the way we live.

What is Autonomous Checkout?

The system is making use of a combination of computer vision, affordable ceiling-based cameras, and a precise in-store navigation map to detect the actions performed by each customer entered.

Customers can have their faces scanned by facial recognition software before entering the store or are required to swipe a card before entering. By understanding the interactions of customers and seeing the movement of products is enabling a checkout-less experience. Whether in retail locations or worksites, users can grab a selection of items and walk away, while the system takes care of recording the transaction.

Using auto-checkouts in stores is a win-win strategy for both customers and retailers. More staff can be employed to help customers shop, rather than spending the company’s resources on cashiers’ manual labor. A frictionless shopping experience is a driving factor for retailers to strive for cashier-less stores.

Trending AI Articles:

1. Why Corporate AI projects fail?

2. How AI Will Power the Next Wave of Healthcare Innovation?

3. Machine Learning by Using Regression Model

4. Top Data Science Platforms in 2021 Other than Kaggle

How it Works

Session Start

Shopping sessions can start in a variety of ways depending on the retailer’s preference. In a standard setup, customers initiate a transaction at an entry gate using a personal QR code from an app. Facial recognition can also be used for identification. Other setups can be configured without an entry gate or even without an app.

Customer Detected

Upon entering the store, strategically placed cameras capture the scene. Deep learning models running on local servers to detect humans in these video feeds.

Anonymous Tracking

When a shopping session is started, customers are assigned a random ID. A central server uses this to track each shopper throughout the store as they pass through from camera to camera.

Product Selection

Using deep learning models trained on product and positioning data from Product Mapper software, the system determines when customers interact with products & whether to add or subtract that item from their cart.

Check-Out

Upon leaving the store customers are charged via their digital wallet, receiving a receipt via email or text. In other configurations, a POS kiosk may auto-populate the customer’s cart for checkout, allowing use of conventional payment methods such as cash, credit, etc.

Conclusion

The disruptive potential of AI-powered checkout systems is here for brick-and-mortar stores to adapt to this new shopping experience. Fewer cashiers reduced checkout lines and reinvented shopping carts will redefine the customer experience. Amplified by machine learning, image recognition, sensors, and deep learning algorithms, frictionless checkout systems are a long-lasting technology. Autonomous checkout technology will reduce labor costs, improve customer experience and improve profit margins for retailers.

Clearly, there is a need for autonomous checkout technology from both a shopper and retailer perspective. However, the major point of focus for retailers should always be the customer’s in-store experience and how they can enhance this through the implementation of autonomous checkout. By being able to improve this experience, shoppers will in turn value those brands that are taking extra steps to put the shopper’s needs first.

Don’t forget to give us your 👏 !

How AI powers Self-Checkout for Retail was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

I recently worked on an end-to-end project that involves accessing real-world data and information from an on-chain Ethereum smart contract. I’d like to explain the importance of this process for future applications as well as explain the process a bit as I find it extremely groundbreaking. I will be explaining this process following the Chainlink protocol architecture as that is what I had used learning the process.

The path that data takes from a regular (and often centralized) data source is this: Data Source API → External Adapter → Chainlink Job (Created by a node operator) → Chainlink Oracle smart contract (or aggregator contract) → Your smart contract trying to use this data for whatever purposes it needs.

Let’s chat about why this is initially interesting overall, but I’m sure as I explain the process, we’ll go into further use cases that underline why I use the word ‘groundbreaking’.

Why?

Smart contracts that live ‘on-chain’ need gas to be decentralized and to execute the operations and functions they were designed for. This ‘gas’ is comparable to computing power, or even more basically, plugging our computer into an outlet in the wall. Electricity flows through the outlet and into your computer and the computer uses that energy as fuel to run whatever code you write on your local machine.

So gas, is what makes the decentralized universe run as it gives incentive to any individuals who stake a token or miners in a blockchain network (which of course is dependent on whether that blockchain runs a proof-of-stake or proof-of-work algorithm).

In the end, this means it is extremely expensive to do operations that might take a lot of computing power. Protocols like Chainlink work to fix this issue by using their infrastructure to allow for these smart contracts to pull this data quickly in one inexpensive operation On-chain, while the bulk of the expensive computation is done Off-chain. For an example, think of how expensive running a large AI neural network is and how much computing power that can require. Wouldn’t you rather not take a mortgage on your home just to pay for the gas to run it once?

BONUS FEATURE: As Chainlink has leveled up, the architecture has gotten more efficient and more decentralized. When data passes through these aggregator nodes, the reason they are called ‘aggregator’ nodes is because they truly aggregate the data and basically check any data’s validity by running the data against other nodes around the world and reach a consensus on whether the data coming On-Chain for the smart contracts to use is accurate. I don’t feel a need to go further into why that is important.

Accessing Real World Data and Your External Adapter

Let’s get to this specific example and the technicals.

The data we want to reach is housed from an API that we can access. So, our next step is to build what is called an external adapter. This adapter itself is run as its own API through Node.js using Express and Typescript. We access the JSON response from calling the API and specify within our adapter the endpoints and information that we will ultimately be retrieving from the data source.

It’s pretty much that simple to draw from the API, now you have the data in your external adapter to use how you wish. I also want to mention that in this external adapter API, you could make it as large as a project as you wish, and for instance, here is where you could set up your AI neural network or some quantum computing algorithm if you wanted. Ultimately, the data that you are happy with here, you will be sending on its way for the smart contract to use.

Once the data is prepared and ready to send on its way, you must ‘dress up’ the data in the right way for a Chainlink node to read it in its own language. Thankfully, Chainlink makes this part pretty simple, as its fairly standard for most external adapters, through their templates which they offer up in their documentation (linked below in references)

Zoom! The data is now moving on to your chainlink node.

Trending AI Articles:

1. Why Corporate AI projects fail?

2. How AI Will Power the Next Wave of Healthcare Innovation?

3. Machine Learning by Using Regression Model

4. Top Data Science Platforms in 2021 Other than Kaggle

The Chainlink Node Operator And Oracle Contract

In this project, I set up my own Chainlink development environment and local Chainlink node for quick testing purposes. This is also technically good as the more nodes on the network push for the ideology of decentralization.

Once my node was up and running, I logged in as an operator and created what is called a ‘Bridge’. This Bridge basically just identified the external adapter I just wrote as an official adapter and made it readily available for my use in any ‘Job’ that I wanted to write. Just think of a Job as the translator between smart contracts and Typescript External Adapters.

To create a Job, we must write a Job spec, that basically just is a pipeline that we feed our data coming in through and makes it easier for the Ethereum (or whatever blockchain you are using) to understand your data.

In order to do this, we must place our Bridge adapter in the Job spec as well as other necessary Chainlink ‘Core’ Adapters which are there for us to use to complete any other operations on our data (i.e. converting our data to uint256 etc.).

In the past, this spec was written in JSON, but with the new versions of Chainlink, specs are now written in TOML, so read up on that if you’re interested in running nodes and creating your own jobs.

One last thing to note, is that here is also a good point to deploy your ‘Oracle Contract’, which you’ll need to successfully finish the job and you’ll also need it in the next step when referring to your job in your smart contract. Luckily, Chainlink also makes this fairly simple through a nice template in their documentation as well as it is a simple contract. You can easily deploy right from Remix IDE and then grab the contract address from Etherscan.io.

The Consumer Smart Contract

Finally, we’ve reached the destination.

Think of ‘Consumer Contract’ as just being the smart contract that is trying to access the real-world API data. There are great templates already out there for the parts of the contract that need to ‘grab’ the data from the Job spec as it runs through the Oracle contract (also in Chainlink documentation below).

You’ll just need to know enough solidity to write any functions that will pull the endpoints, or specific data from the JSON responses from the external adapters. Then feel free to do however you please with the data!

Conclusion

The future holds so many use cases for smart contracts and this idea of Oracles and basically bridging the scary gap of computation and data as we collect Off-chain and placing that On-chain for the blockchain to do its thing. The architecture of this process is always improving and being worked on every day. Another great benefit is that many of these Oracle protocols are ‘Blockchain-Agnostic’ meaning that they don’t rely on Ethereum specifically to succeed. New blockchains are being created all the time and this architecture is improving to be compatible with new blockchains all the time. This is awesome for true decentralization!

References

https://docs.chain.link/ — chainlink documentation

https://github.com/smartcontractkit/external-adapters-js — chainlink external-adapter typescript template-repo

https://www.gemini.com/cryptopedia/what-is-chainlink-and-how-does-it-work#section-where-do-link-tokens-fit-in — Better help in understanding the process of Chainlink or Other oracle services.

https://docs.chain.link/docs/fulfilling-requests/ — Fulfilling Node Requests and Oracle Contract Deployment

Don’t forget to give us your 👏 !

The Groundbreaking Bridge Between Real World Data and Smart Contracts was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

Source: The Groundbreaking Bridge Between Real World Data and Smart Contracts

Date: 14 October 2021, 10:25 am

Artificial Intelligence (AI) is everywhere — in our phones, in our offices, in our cars, and pretty much everything else one can imagine. So, it only makes sense this technology has made inroads into the world of e-commerce as well, which happens to be one of the most popular industries in the world at the moment. It is revolutionizing eCommerce for small and big businesses where it is being used by various retail companies to develop a better understanding of their customers.

Despite the popularity of the sector, it is starting to feel the need for better and advanced tools that can help address some of the more complex challenges. You would agree AI-powered commerce enables customer-centric online searches, identifies prospective customers, answers customers’ queries, simplifies sales techniques, establishes actual conversations with customers through chatbots, etc.

Suffice it to say that AI is more than ready to do that and so much more.

1. Efficient sales: The thing about the modern-day customer is that they are very finicky and thus, quite challenging to win over. Thankfully, artificial intelligence is well-equipped to take on this challenge; when integrated with the e-commerce retailer’s CRM system, AI tools can tap into other advanced tools such as voice input, natural language learning, etc. to drive better customer service operations via answering customer queries, identifying new sales opportunities, etc. While certain CRM systems integrated with AI may be able to handle only some of the aforementioned tasks, a newer crop of systems can successfully execute all these functions with absolute ease.

2. Improve customer targeting: Today, the market is brimming with avant-garde AI tools and solutions that empower e-commerce companies with exceptional data and intelligence to better target their audience and improve lead generation among other things. These AI solutions for e-commerce retailers’ sales, CRM, and marketing systems help generate a significantly better quality of leads by making use of the data captured from across the company’s systems. This data helps companies find high-quality prospects, which empowers their sales teams to garner new business and more sales.

Trending AI Articles:

1. Why Corporate AI projects fail?

2. How AI Will Power the Next Wave of Healthcare Innovation?

3. Machine Learning by Using Regression Model

4. Top Data Science Platforms in 2021 Other than Kaggle